
“Digital human” refers to using CG to create human characters indistinguishable from live-action characters

“Digital human” does not refer to using a specific technology. Rather, simply put, it refers to “using CG to create human characters indistinguishable from live-action characters.”

One application is to use CG to recreate someone who no longer exists in the real world (for instance, someone who has died) and make them appear to be alive. In Japan, this was done in a 2017 beverage TV commercial featuring Yusaku Matsuda. Overseas, a famous example is a 2013 chocolate commercial featuring Audrey Hepburn.

Amid examples like these, we started our own “digital human” research and development five years ago.

At that time, people might have thought, “Is that a live-action composite?”

What are some real-world examples of “digital humans”?

The history of CG spans decades, with constant attempts to create realistic human beings, but in my opinion the first work to reach a quality comparable to live action, one that could bear the name “digital human”, was the movie The Curious Case of Benjamin Button (2008), starring Brad Pitt. The audience, of course, didn't think of it as a “digital human”, but they must have wondered how Brad Pitt's 80-year-old face was attached to a child's body. At that time, people might have thought, “Is that a live-action composite?”

Recently, young Linda Hamilton and Arnold Schwarzenegger were revived in CG in Terminator: Dark Fate (2019). And in Mad Max: Fury Road (2015), the scene featuring Tom Hardy on a motorcycle apparently could not be shot on schedule, so only the part from the neck up ended up being CG.

The most ambitious recent film was Gemini Man (2019), starring Will Smith, whose younger self was entirely CG. Netflix also made Robert De Niro and Al Pacino young again with CG in The Irishman (2019). These are all “digital humans”. Given the number of cuts in The Irishman, I imagine the compositing work was enormous.

All I could say was, “We can’t [do Benjamin Button].”

That stuck with me

Why did you decide to do digital human research at Digital Frontier?

It was triggered by the words of a certain cameraman. When we made the GANTZ (2011) movie with director Shinsuke Sato, we met cinematographer Taro Kawazu for the first time and he asked, “Can you do something like Benjamin Button with your CG?” (laughs). Of course, I had seen the movie and I was very interested in the way it showed Brad Pitt getting younger with time, but at that time, all I could say was, “We can't.” That stuck with me.

A few years after this event, I was able to secure a budget for research and development and started the long-sought-after “digital human” project. That was about 5 years ago.

First, I thought I would resurrect the dead.

Please tell us about the making of Shintaro Katsu as a “digital human” in the short film, Zatoichi Zero, that Digital Frontier actually took on.

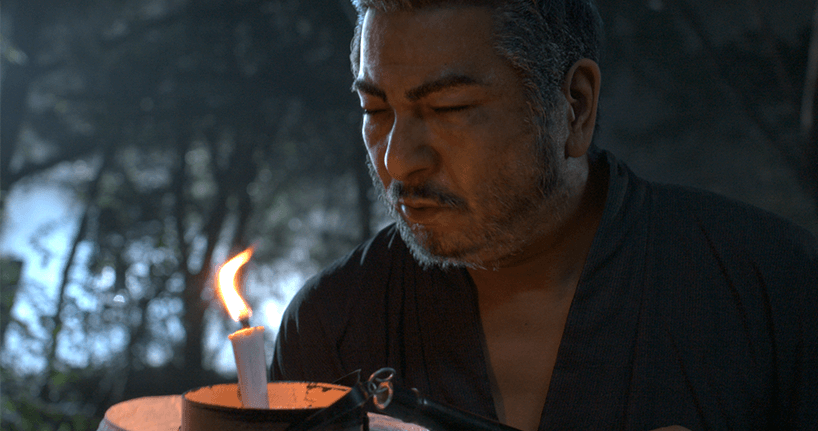

I wondered what would be something meaningful we could do with “digital humans,” and my first thought was to “resurrect the dead.” I personally had a fondness for Shintaro Katsu's Zatoichi (1989), so I decided to resurrect him.

In hindsight, this choice worked in our favor. Shintaro Katsu has short hair in Zatoichi, so little R&D (research and development) was needed for hair simulation (swaying and fluttering). And because Zatoichi is blind and his eyes are all white, no R&D was needed for the eyes either. In the end, the R&D required was minimal.

To make a character look like live action, you need dedicated research into hair, eyes, and skin, and each of these takes a lot of time and effort.

Next, Shintaro Katsu's realistically made face is placed on the moving body of an actor whose build resembles Katsu's. However, since this was a Zatoichi project, we prioritized sword-fighting ability over a similar build. I asked a sword fighter I knew who was not very tall but had a solid build. I think he was actually shorter than Shintaro Katsu, but his kimono costume hid his body, so the top priority was the ability to sword fight.

For the skin, we scanned a different person from the one who performed the movement. The target was the skin of Shintaro Katsu as he appeared in the last Zatoichi movie in 1989, and when I searched the Internet for someone close to him, I found a bodybuilder in Osaka. At the time, the only available scanner was in Taiwan, so I asked the bodybuilder to come to Taiwan, where his facial expressions and skin were captured with LightStage, a dome-shaped rig that photographs the subject with several cameras covering 360 degrees.

LightStage can capture skin detail at a level close to looking at it through a microscope. Without information at that level, it is difficult to reproduce the realistic detail that could be mistaken for live action.

For detailed articles on LightStage, see:

・DF TALK「LightStage解体新書」 (“Dissecting LightStage”)
・DF TALK「LightStage 解体新書 Part2」 (“Dissecting LightStage, Part 2”)

Image of skin being scanned on LightStage

This is the finished Zatoichi Zero

No other company in Japan has been doing “digital human” research for 5 years.

How is the “digital human” technology that you do at Digital Frontier different from others?

Since a “digital human” is a collection of technologies, I don't think our approach differs fundamentally from other companies'. One of our strengths, though, is that we have a long history of research in this genre and the technology to bring CG models to life at a quality that looks like live action.

Consider the case where everything from the neck up is replaced with a “digital human”: whether the composite looks real depends on the facial expressions. In a still image, the skin texture stays fixed, so correctly reproducing how light reflects off the skin is enough to bring the CG close to a real photograph. Video is a different story.

Human facial expressions vary widely, and tiny movements of just a few facial muscles can completely change the emotion being expressed, so any lack of quality here will keep the video from looking like live action.

At our company, we make one base face and about 80 facial expression patterns for it. We then combine them, for example expression A at 30%, B at 40%, and C at 30%, to create the desired facial expression.
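Percentage mixing of this kind is what CG tools commonly call blend shapes (morph targets). The sketch below assumes the standard delta formulation, in which every expression target has the same vertices as the base face and each weight scales that target's offset from the base: vertex = base + Σ weight × (target − base). The article does not say how Digital Frontier's pipeline combines weights internally; the function name `blend_expression` and the one-vertex toy mesh are hypothetical.

```python
from typing import Dict, List, Tuple

Vertex = Tuple[float, float, float]
Mesh = List[Vertex]


def blend_expression(base: Mesh, targets: Dict[str, Mesh], weights: Dict[str, float]) -> Mesh:
    """Blend expression targets onto a base face (hypothetical helper).

    Each output vertex is base + sum_i(weight_i * (target_i - base)), so a
    weight of 0.3 adds 30% of that expression's offset from the base face.
    """
    result = [list(v) for v in base]
    for name, weight in weights.items():
        target = targets[name]
        if len(target) != len(base):
            raise ValueError(f"target {name!r} has a different vertex count than the base")
        for i, (b, t) in enumerate(zip(base, target)):
            for axis in range(3):
                result[i][axis] += weight * (t[axis] - b[axis])
    return [tuple(v) for v in result]


# Toy one-vertex "face" with three hypothetical expression targets A, B, C.
base = [(0.0, 0.0, 0.0)]
targets = {
    "A": [(1.0, 0.0, 0.0)],
    "B": [(0.0, 1.0, 0.0)],
    "C": [(0.0, 0.0, 1.0)],
}
print(blend_expression(base, targets, {"A": 0.3, "B": 0.4, "C": 0.3}))
# -> [(0.3, 0.4, 0.3)]
```

Storing offsets rather than absolute shapes means weights do not have to sum to 100%: an empty weight set returns the base face unchanged, and each added target contributes only its own deformation.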

So if there are too few expression patterns to draw on, the detail suffers and the result won't look like live action. Reaching live-action quality takes manpower, experience, and years of accumulated research.

It was five years ago that I started working on “digital humans” at Digital Frontier, and to my knowledge, no other company in Japan has been doing “digital human” research for five years.

“Persons that exist/existed” and “non-existent persons”

When someone comes saying, “I want to use digital humans”, how do you proceed?

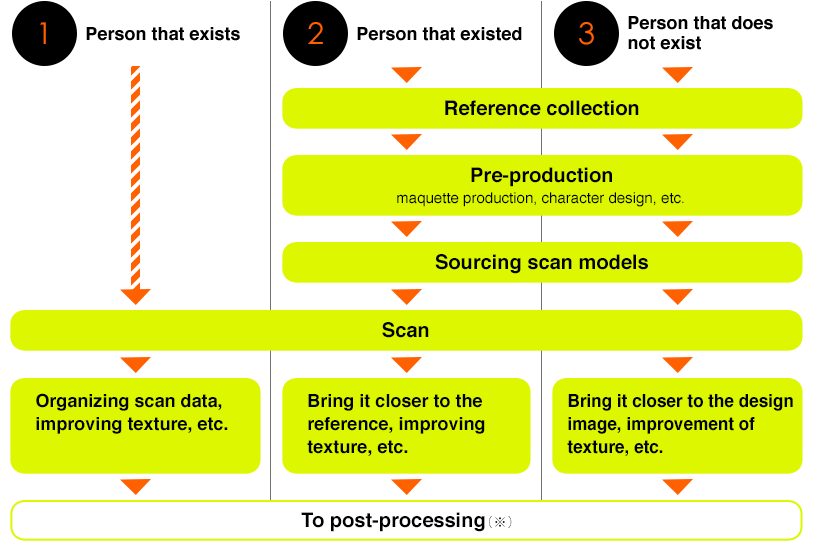

First of all, I think there are three patterns for the finished product. The first is a “person that exists”: converting a living person into CG as they are. The second restores the appearance of a “person that existed”, that is, someone who has died or a person as they looked at a certain point in the past, using CG based on available materials. The third is a “non-existent person”: designing a human from scratch.

Let me explain the process of creating a "digital human" head model based on actual cases.

Pattern 1

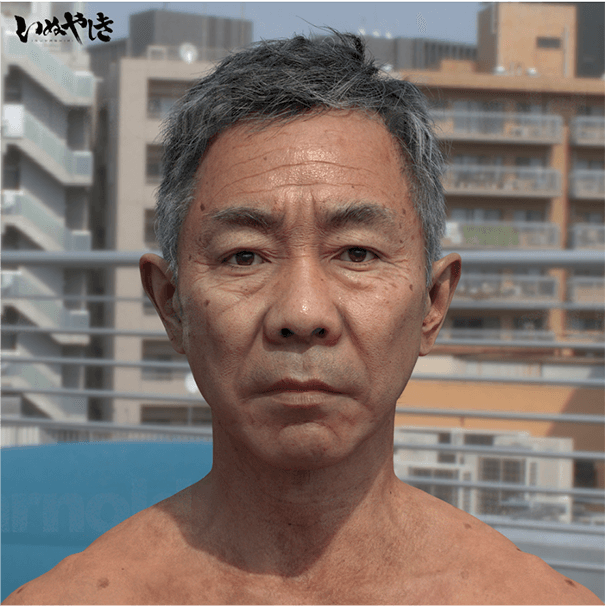

Person that exists: Inuyashiki

Noritake Kinashi, whose protagonist is rendered as a “digital human” in Inuyashiki (2018), is a living actor, so we scanned him wearing the character’s makeup and photographed his skin texture and facial shape.

Pattern 2

Person that existed: Zatoichi Zero

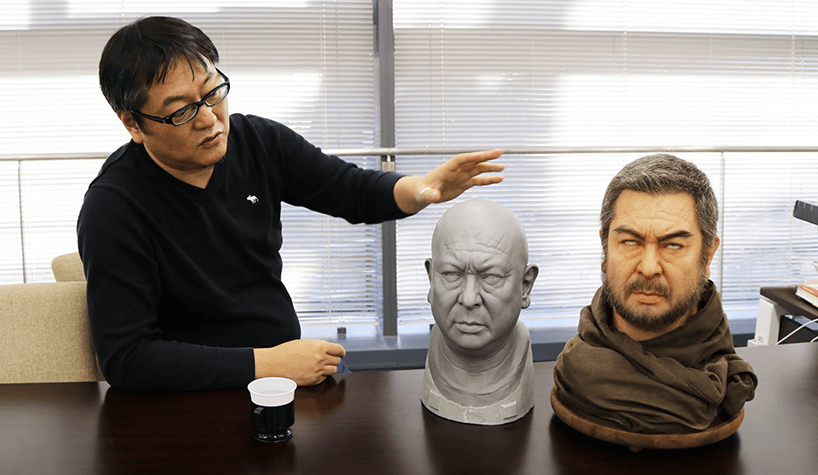

Zatoichi Zero was our first “digital human” production, and we wanted to do everything we could to improve quality, so we asked Kazu Hiro (Kazuhiro Tsuji), who won the Academy Award for Best Makeup and Hairstyling in 2020, to make a maquette (a model made of silicone or clay) of Shintaro Katsu's head.

We made a CG model by scanning this maquette and the person who resembles Shintaro Katsu, as I mentioned in the previous section.

Because the person being modeled is no longer around to serve as a correct reference, pre-production (the preparatory stage before CG production) and modeling cost more than in pattern 1.

Pattern 3

Non-existent person

Currently, we have an in-house project underway to create an original female character. We are making several composite faces and going with the one we like: much like assembling a montage photo, we build a character we like and then make it three-dimensional.
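The article does not describe how the composite faces are produced. One common way to build a composite (average) face is to average the positions of corresponding facial landmarks across several aligned photos, optionally weighting some faces more heavily; the sketch below assumes that approach. The function name `composite_landmarks` and the sample landmarks are hypothetical, and a full montage would also warp and blend the pixel textures, which is omitted here.

```python
from typing import List, Optional, Sequence, Tuple

Point = Tuple[float, float]


def composite_landmarks(
    faces: Sequence[Sequence[Point]],
    weights: Optional[Sequence[float]] = None,
) -> List[Point]:
    """Weighted average of corresponding 2D landmarks across several faces.

    Assumes every face has already been aligned (same position, scale and
    rotation) and that landmark i means the same feature in every face.
    """
    if not faces:
        raise ValueError("need at least one face")
    count = len(faces[0])
    if any(len(face) != count for face in faces):
        raise ValueError("all faces must have the same number of landmarks")
    if weights is None:
        weights = [1.0] * len(faces)
    if len(weights) != len(faces):
        raise ValueError("need exactly one weight per face")
    total = float(sum(weights))
    if total <= 0:
        raise ValueError("weights must sum to a positive value")
    composite = []
    for i in range(count):
        x = sum(w * face[i][0] for w, face in zip(weights, faces)) / total
        y = sum(w * face[i][1] for w, face in zip(weights, faces)) / total
        composite.append((x, y))
    return composite


# Two hypothetical faces, each reduced to two landmarks (e.g. eye corners).
face_a = [(0.0, 0.0), (10.0, 0.0)]
face_b = [(2.0, 2.0), (12.0, 2.0)]
print(composite_landmarks([face_a, face_b]))
# -> [(1.0, 1.0), (11.0, 1.0)]
```

Adjusting the weights (for example `[3, 1]`) pulls the composite toward one source face, which is one way a team could iterate toward “a face we like” before moving to 3D.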

Here again there is no correct three-dimensional reference, so shape adjustment is left to the designer.

For scanning, the staff searched the Internet and social media for people who broadly resembled the synthesized face and had similar facial features, and we photographed their skin information and facial expressions. Modeling work starts from that data.

Production process of the above 3 patterns

* Once the head is complete, it goes through further processing, such as setup (rigging) and facial target creation, before it is ready to be combined with the live-action footage.

Pursuing the question, “What do humans perceive as ‘human’?”

What kind of development do you want to go into in the future?

I would like to work on a film production featuring original “digital human” characters. At the same time, I would like to push real-time animation and rendering (computing and generating CG images) forward, and to introduce “digital humans” casually on social media.

With pre-rendered video and film work like our previous projects, every bit of screen time takes enormous effort and cost, so there are few opportunities to show the characters off. It is many times more appealing to make characters that move crisply in real time and release them to the world. And if not only the face but the whole body, including clothes, can be rendered as CG in real time, the character can cross media and appear anywhere. I’m hoping that day will come by the end of the year (laughs).

Something I would also like people in entertainment production to consider is having actors co-star with their younger selves, which becomes possible if we scan actors while they are young. Along the same lines, it would be possible to see a new historical drama in which Shintaro Katsu, Toshiro Mifune, and Yusaku Matsuda co-star. I think it is doable if there is a budget for it (laughs). And it would be a shame not to do something so interesting.

One issue we face is voice recording. That is beyond the scope of our production capabilities, so I think we would partner with another company and have voice actors perform the lines through a voice changer, much like dubbing an animation.

Naturally, improving the appearance and the quality of the performance is the eternal theme of CG. The question “What do humans recognize as ‘human’?” has no clear scientific answer, so we may simply have to keep studying skin texture and muscle movement and track down whatever feels off.

Project Development Group: Toyoshima, Kohiyama, and Endo (from left)