Digital Frontier

CG MAKING

Death Note (TV Series)

July 2015 [CG]

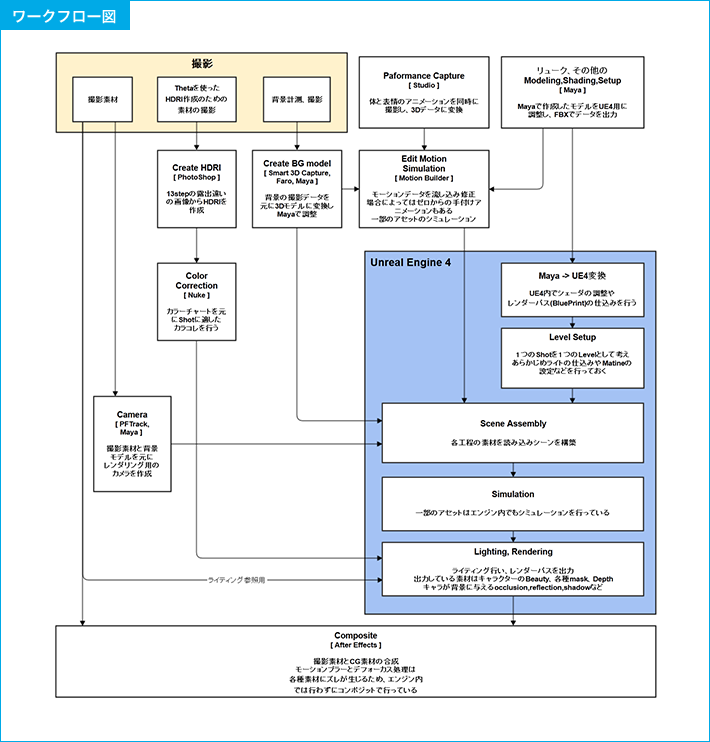

Using a Game Engine in the CG Production Process for a TV Program

We talked about the production floor with Yasushi Yamaguchi from the Development Department, Hitoshi Miyake, who serves as project leader on the CG production team, and Shinji Watanabe, who is responsible for the Unreal engine. Although they said, "This was the first time the team had undertaken a television series," Unreal enabled them to bring CG characters to life in a weekly drama. Rendering that took tens of hours per frame when the movie was produced can now be done in real time.

The Challenge: Seeing How Close We Could Get to the Level of Films From 10 Years Ago

The best part was that we could work in real time right up to the end, which is something we could not have achieved with pre-rendering. Rendering times are incredibly long, especially for lighting, so being able to work on it in real time made a huge difference. Unreal can cut down some rendering processes, but it cannot cut down compositing. Even so, we didn't have much time to spend on compositing, so we had to trim that processing too, which was painful. If the workflow fails at one point, it has a domino effect. The pressure of a minimal, non-stop workflow was particularly extreme around the delivery of the first episode. Three days before its broadcast, we were still doing retakes on about 30 cuts that had been returned. At the time, we worked through them with 3 or 4 people at a pace of 2 cuts per hour.

In the compositing work, the original material (4K) had to be converted to 2K, 8-bit before we could start. The Ryuk material came out as 8-bit and we had to match that, but it didn't blend well with the photographed footage (because the resolution was low). Also, because the director wanted Ryuk to appear more clearly, we were asked to increase the contrast around Ryuk and make everything brighter with the television screen in mind.
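As a rough illustration of the kind of conversion described above, the sketch below halves the resolution of a single-channel image with a 2x2 box filter and then quantizes it to 8-bit codes. It is only a minimal sketch under stated assumptions: the function names (`downsample_2x`, `quantize_8bit`, `to_2k_8bit`) are hypothetical, the image is a plain list of rows of floats in the range 0.0 to 1.0, and the actual tools and filters used by the team are not described in the interview.

```python
# Hypothetical sketch: not the team's actual conversion pipeline.

def downsample_2x(pixels):
    """Average each 2x2 block (box filter), halving width and height."""
    height, width = len(pixels), len(pixels[0])
    return [
        [
            (pixels[y][x] + pixels[y][x + 1]
             + pixels[y + 1][x] + pixels[y + 1][x + 1]) / 4.0
            for x in range(0, width - 1, 2)
        ]
        for y in range(0, height - 1, 2)
    ]


def quantize_8bit(value):
    """Map a float in [0.0, 1.0] to an integer code in [0, 255]."""
    clamped = min(max(value, 0.0), 1.0)
    return int(clamped * 255 + 0.5)  # round half up


def to_2k_8bit(pixels):
    """Halve the resolution, then reduce every sample to 8 bits."""
    return [[quantize_8bit(v) for v in row] for row in downsample_2x(pixels)]


if __name__ == "__main__":
    # A tiny 4x4 stand-in for a 4K frame (assumed data).
    frame = [
        [0.0, 0.0, 1.0, 1.0],
        [0.0, 0.0, 1.0, 1.0],
        [0.5, 0.5, 0.25, 0.25],
        [0.5, 0.5, 0.25, 0.25],
    ]
    print(to_2k_8bit(frame))
```

With only 256 codes per channel, nearby source values collapse onto the same code, which is one reason heavy contrast or brightness adjustments on 8-bit material leave little headroom.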

Continuing Through Trial and Error

We took a risk: to speed up the process, we had chosen a game engine that had been used for little other than games, so we had to start with zero experience and learn as we worked. The initial round of trial and error to fix bugs and polish everything was demanding. Unreal speeds up rendering through automatic calculations by default, so in scenes where no character is present in the first frame, for example a scene in which a character suddenly appears on screen, the character wouldn't show up. This was a problem we had to deal with. The character would appear in previews, but would disappear after rendering (laughs). The apple would disappear, the chain around the waist wouldn't appear, and other things like this happened one after another. We were under an incredible amount of pressure when, the day before the target delivery date, we were still dealing with repeated cases of things not appearing.

More often than the engine being unable to do something, the problem was that our conventional Maya-based workflow didn't carry over well to working in real time. We tried switching to the engine in place of pre-rendering as part of our workflow. We created a workflow that involved no simulation in Maya and in which work was completed directly in Unreal and MotionBuilder. We created assets in Maya, did most of the subsequent processing in MotionBuilder, and then ran them through Unreal. This simplified things, but it also caused problems across the overall workflow when one thing or another couldn't be done. The model data was basically refined movie data, which isn't in a form optimized for games. We weren't prepared for what would happen when we took that data and put it into Unreal.

We did a lot of things that are taboo in game production, often without knowing that they simply aren't done.

How Do You Evaluate the Work Carried Out With a Limited Workforce and Schedule?

Our workflow and level of experience improved as we completed episodes, and we became able to do things more quickly and smoothly. We think this will also lead to better quality. We would love it if the Unreal development team developed a tool capable of outputting 16-bit multipass image sequences. We expect that quality will inevitably go up as Unreal develops further. The team went through many hard times resolving problems and there were times when we were down in the dumps, but despite this, it would be nice if DF also developed an interest in real-time engine applications.

Even now, there are various ways of using Unreal's functions. Unreal was only used for characters in this case, but it could also be used for backgrounds. Because it is a game engine, the techniques for this already exist, so if we learn to use this tool, CG could be integrated into video better and represented better. There are many ways representation could be improved. Another company (a game development company), for example, deals with problems by using the engine without plugins and working creatively with Unreal data. In that respect, it would be good to improve by learning from others and to find a flow that we can keep using in the future while still applying the DF workflow. In addition to adopting the Unreal engine, we are also having the development team work on bringing the Unreal engine closer to pre-rendering software.

Designers' motivation tends to fade when quality isn't emphasized, but our team did their best on the program by trying to see "how high the quality could go (within a limited time)." Because we were working in television, our work was broadcast quickly, which got everyone excited and motivated them to work hard on the next installment. Everyone kept pushing forward, recharging over the weekend and starting on a new job on Monday.

It Would Not Have Been Possible Without DF's Workflow

Animation plays a huge role in CG production. Because we have a motion capture studio, we have built a pipeline for creating animation without subcontracting. DF's strength is that we have an animation production team and use a game engine. This is one of the factors that allowed us to succeed with this project. Also, there aren't many production companies in Japan with a fully staffed R&D shot design team. Now that it has been demonstrated that a game engine can be used for CG video production instead of pre-rendering, there may be more opportunities to use CG animation in television programs in the future. Mr. Toyoshima says, "This is a great achievement in terms of bringing to the world something special that overturns conventional concepts."

WATANABE

This was our first time creating CG characters that had a human-like appearance and occasionally interacted with actors. While we wanted to take our time, we had to meet the demanding television production schedule. The original work (manga) and the movie also came first, so we couldn't break from their universe. What we were striving for with the program was not simply to emphasize quality but to see how close we could get to the level of movies from 10 years ago, so the role of Unreal was to see how close we could get (to movie quality) within a limited time frame.